As part of Metis’s data science bootcamp we were tasked with investigating a topic related to data science not covered in the bootcamp; I chose learn about OpenAI’s DALL·E. DALL·E is a version of OpenAI’s GPT-3 making it a class of deep learning model called a transformer. While reading about DALL·E I became curious, what is a transformer and what sets it apart from previous models used in natural language processing?

Some backgroud on models before transformers

Let’s first discuss the precursors to transformers, RNNs and CNNs. Recurrent Neural Networks (RNNs) process each word within a text separately and pass the information from the previous word to the next network to process that network’s information. You could visualize this as building a chain, issues arise when that chain of words becomes too long. When this ‘distance’ between the needed relevant information and where it is needed becomes very large RNNs begin to struggle. The creation of Long-Short Term Memory (LSTM) models, a type of RNN, was an attempt to resolve this problem however these models still do not perform as well with longer texts. Since both RNNs and LSTMs sequential processing also prevents parallelization as they process word by word.

To resolve some of these problems researchers developed a technique called Attention. Instead of encoding the whole sentence in a hidden state, each word has its own hidden state that is passed to the decoding process. These hidden states in the decoding stage are used to figure out where the neural network should pay attention. While attention resolves some of the context based issues of RNNs, CNNs address parallelization.

Convolutional Neural Networks can work in parallel because each word of the input can be processed at the same time and do not depend on previous words to be translated. While the ‘distance’ from output and input in RNNs is in the order of N, for CNN’s it is in the order of Log(N). However CNNs still do not resolve the issue of dependencies when translating.

Finally Transfomers!

Transformers use CNNs together with attention models, specifically using self-attention. Each encoder in a transformer is made of two layers: a Self-Attention layer and a Feed Forward Neural Network (FFNN). Each decoder has the same layers but with an Encoder-Decoder Attention layer between the other layers. The Self-Attention layer looks at the token or input word and the entire sequence and attaches understanding from previous words that are relevant to the word and then outputs to the FFNN which is where the model creates its transformed output (translation, next predicted word, etc.). Transformers also use Multihead attention. When translating a word you may pay different attention to each word based on the question you are asking. In a sentence like ‘The dog ran outside because it was scared’, multihead attention helps the model access which word ‘it’ refers to. Positional encoding in transformers accounts for the order of the words in the input sequence. When compared to RNNs, transformers allow for much more parallelization thereby reducing training times which has allowed for the training of much larger datasets than were previously possible.

What’s to interesting about DALL·E?

What’s so interesting and exciting about DALL·E is that instead of creating text from text or a new image from an existing image, it creates images from text. While the concept of generating an image from text may not be a new one the sheer scope of DALL·E’s ability is nothing short of impressive. OpenAI’s blog post on DALL·E describes how the model works as, “a simple decoder-only transformer that receives both the text and the image as a single stream of 1280 tokens—256 for the text and 1024 for the image—and models all of them autoregressively. The attention mask at each of its 64 self-attention layers allows each image token to attend to all text tokens. DALL·E uses the standard causal mask for the text tokens, and sparse attention for the image tokens with either a row, column, or convolutional attention pattern, depending on the layer.”

But what are DALL·E’s capabilities? While the OpenAI blog post has a comprehensive list of DALL·E’s capabilities there are a few that I find particularly interesting. DALL·E has some ability to infer contextual details, this is the model’s ability to render details that were not explicitly mentioned in the text prompt such as shadows.

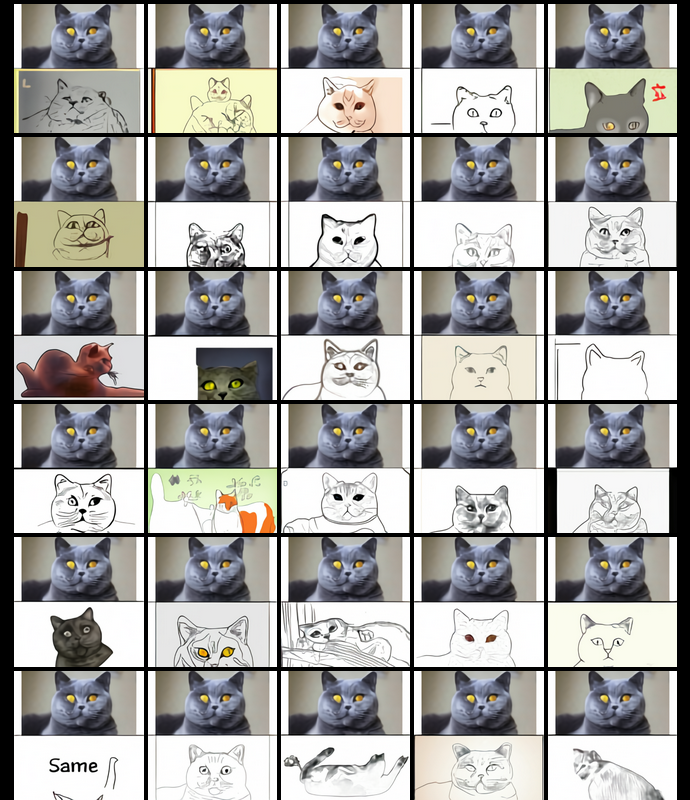

.png)

DALL·E’s ability to combine unrelated concepts into new images ranges from the surrealist combinations of animal and instrument to more realistic images of products that take inspiration from an unrelated concept.

.png)

.png)

DALL·E also exhibits some capability with the zero-shot reasoning present in OpenAI’s GPT-3 model it is based on. Zero-shot reasoning is a model’s ability to perform a task solely from description and a cue to generate the answer supplies in its prompt without additional training.

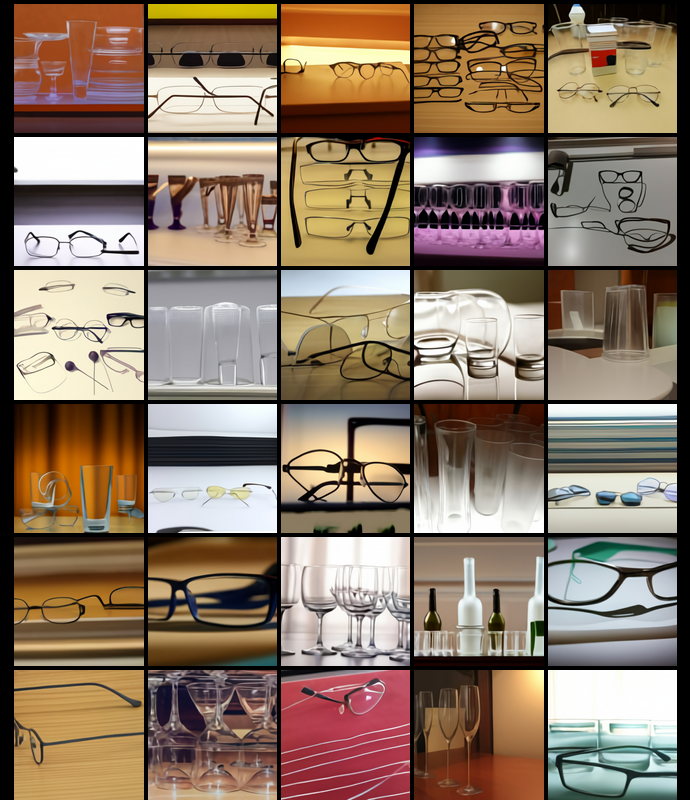

While DALL·E is an exciting advancement in transformers; it is not without flaws or raised concerns. As seen in the text prompt “a collection of glasses is sitting on a table” section of the DALL·E blog post, DALL·E produces images both of drinking glasses and reading glasses showing difficulty with nouns that have multiple meanings.

It also shows difficulty with prompts that have a lot of variable binding where variables may become mixed up or completely excluded from the resulting image.

.png)

With how cutting-edge DALL·E is at the point of writing this post (February 2021, DALL·E blog post released Jan 2021), it isn’t surprising that even with the limited selection of material presented there are still obvious areas of needed improvement with the model. No model is perfect and one as advanced as DALL·E is no exception. What I cannot help but wonder about are the potential implications of models like DALL·E. I find it easy to image DALL·E being used as a method of essentially creating e-commerce deepfakes. Some of the images could easily be mistaken for real-world images, for example some of the avocado chairs look like images of a chair you might see on a furniture website. It is not out of the realm of possibility that someone could scam money from shoppers by listing items for sale generated by DALL·E that look realistic but do not in fact exist. DALL·E is not available for public use at the time of this post, I’m curious to see the additional research OpenAI will conduct and implement to mitigate bias and help prevent unethical usage of DALL·E.

Sources

DALL·E: Creating Images from Text

How Transformers work

The Illustrated Transformer

What is a Transfomer?

Transfomer (machine learning model)

Comments